Table of contents

- Why MLOps?

- Essential tools for each category that you might need to learn during your MLOps journey

- 1. Data Management & Versioning:

- 2. ML Frameworks:

- 3. MLOps Platforms & ML Lifecycle:

- 4. Data Pipelines:

- 5. Containerization & Orchestration:

- 6. CI/CD for ML:

- 7. Monitoring & Logging:

- 8. Distributed Training & Scaling:

- 9. Cloud Platforms (Optional but beneficial):

- 10. Experimentation & Reproducibility:

- 11. Community & Collaboration:

Why MLOps?

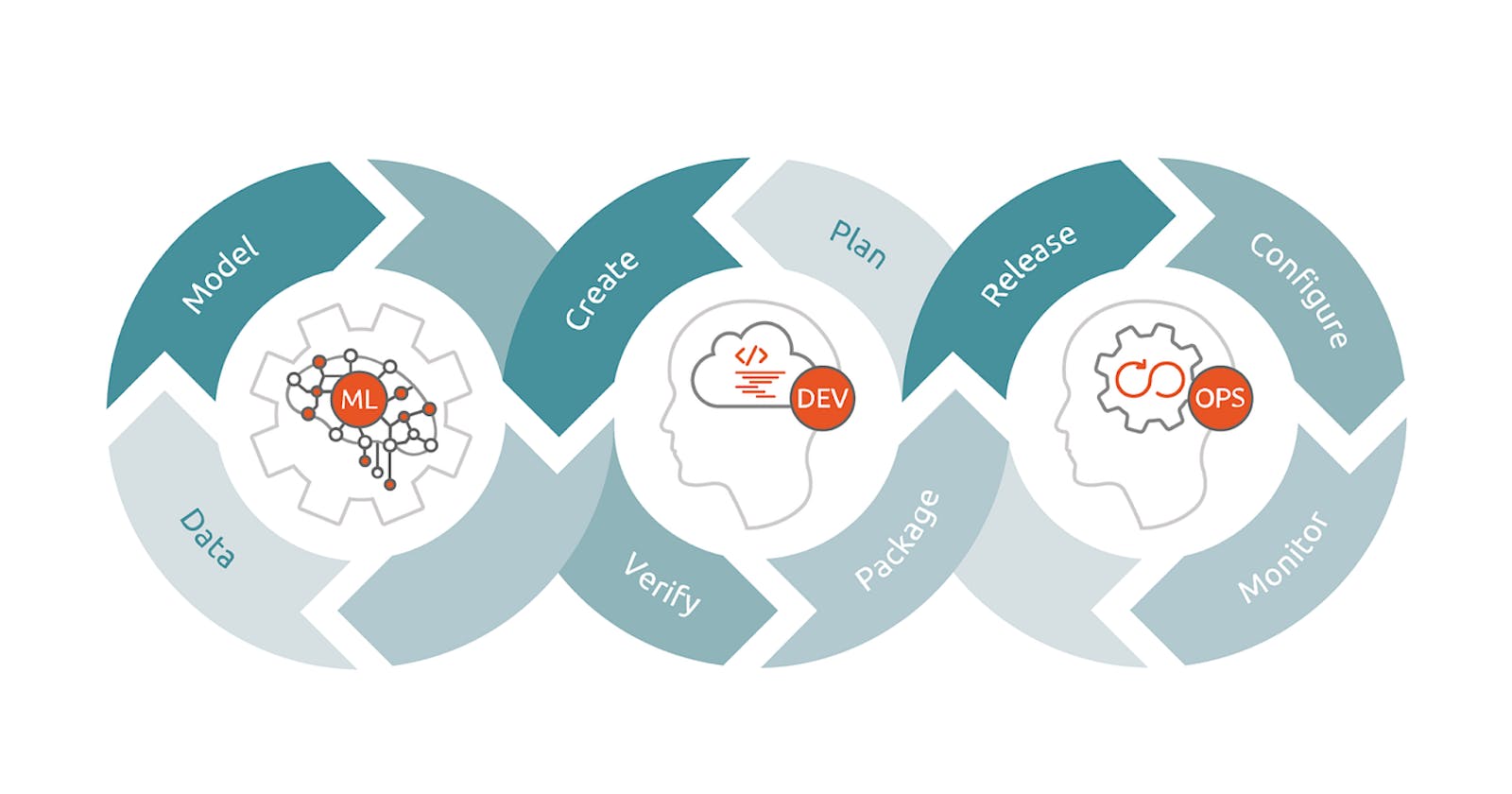

A brief note on my motivation: as ML solutions become ubiquitous, smooth deployment, monitoring, and scaling of these solutions becomes paramount. Enter MLOps, which strives to harmonize ML system development (Dev) and ML system operation (Ops). I see it as the natural evolution of my journey in the tech world.

Essential tools for each category that you might need to learn during your MLOps journey

1. Data Management & Versioning:

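- DVC (Data Version Control): An open-source version control system for machine learning projects. It works alongside Git, tracking large data files and models through small metadata files so that data, code, and model versions stay in sync.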
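To make the idea of keeping data versions in sync concrete, here is a minimal sketch of content-hash tracking using only the Python standard library. It is not DVC's API or file format: the `.pointer.json` layout and the function names `write_pointer` and `is_in_sync` are assumptions made up for illustration.

```python
import hashlib
import json
from pathlib import Path


def file_sha256(path: Path, chunk_size: int = 1 << 20) -> str:
    """Hash a file in chunks so large datasets do not need to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def write_pointer(data_path: Path) -> Path:
    """Write a small pointer file (hypothetical format) that Git could track."""
    pointer = {
        "path": data_path.name,
        "sha256": file_sha256(data_path),
        "size": data_path.stat().st_size,
    }
    pointer_path = data_path.with_name(data_path.name + ".pointer.json")
    pointer_path.write_text(json.dumps(pointer, indent=2))
    return pointer_path


def is_in_sync(pointer_path: Path) -> bool:
    """Return True if the data file still matches the hash in its pointer."""
    pointer = json.loads(pointer_path.read_text())
    data_path = pointer_path.with_name(pointer["path"])
    return data_path.exists() and file_sha256(data_path) == pointer["sha256"]
```

The large file stays out of Git while the tiny pointer file is committed, so checking out an old commit tells you exactly which version of the data belongs with that code.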

2. ML Frameworks:

TensorFlow: An open-source framework developed by Google. It's widely used for various ML and deep learning tasks.

PyTorch: Originally developed by Facebook's AI research lab (now Meta AI), it is the other major deep learning framework alongside TensorFlow.

Keras: A high-level neural networks API written in Python. Keras 3 runs on top of TensorFlow, JAX, or PyTorch; older versions supported TensorFlow, CNTK, and Theano.

Scikit-learn: A Python library of simple and efficient tools for classical machine learning, including classification, regression, clustering, and preprocessing.

3. MLOps Platforms & ML Lifecycle:

MLflow: An open-source platform that manages the end-to-end machine learning lifecycle.

Kubeflow: A Kubernetes-native platform for developing, orchestrating, deploying, and running scalable and portable ML workloads.

4. Data Pipelines:

Apache Airflow: A platform to programmatically author, schedule, and monitor workflows.

Apache Kafka: A distributed streaming platform useful for building real-time data pipelines.
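Workflow tools such as Airflow model a pipeline as a directed acyclic graph (DAG) of tasks. The sketch below shows only that core idea with the standard library's `graphlib`; it is not Airflow's API, and `run_pipeline` and the task names are hypothetical.

```python
from graphlib import TopologicalSorter
from typing import Callable, Dict, List, Set


def run_pipeline(
    tasks: Dict[str, Callable[[], None]],
    upstream: Dict[str, Set[str]],
) -> List[str]:
    """Run every task after all of its upstream tasks; return the order used.

    `upstream` maps a task name to the set of tasks it depends on.
    A cycle raises graphlib.CycleError before any task runs.
    """
    order = list(TopologicalSorter(upstream).static_order())
    for name in order:
        tasks[name]()
    return order


if __name__ == "__main__":
    # Hypothetical three-step ETL pipeline.
    steps = {
        "extract": lambda: print("pulling raw data"),
        "transform": lambda: print("cleaning features"),
        "load": lambda: print("writing to the feature store"),
    }
    deps = {"transform": {"extract"}, "load": {"transform"}}
    print(run_pipeline(steps, deps))
```

A real scheduler adds retries, scheduling intervals, and distributed workers on top of this ordering step.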

5. Containerization & Orchestration:

Docker: A platform for developing, shipping, and running applications in containers.

Kubernetes: An open-source platform for automating deployment, scaling, and management of containerized applications.

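NVIDIA Docker: For containerizing GPU-based applications. The original nvidia-docker wrapper has been superseded by the NVIDIA Container Toolkit, which gives containers access to the host's GPUs.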

6. CI/CD for ML:

Jenkins: An open-source automation server that can be used to automate various stages of ML pipelines.

GitLab CI/CD: CI/CD pipelines built into GitLab and configured through a `.gitlab-ci.yml` file in the repository.

GitHub Actions: CI/CD and automation directly within GitHub.

7. Monitoring & Logging:

Prometheus: An open-source systems monitoring and alerting toolkit.

Grafana: An open-source platform for dashboards and visualization of metrics, often used with Prometheus as a data source.

ELK Stack (Elasticsearch, Logstash, Kibana): For searching, analyzing, and visualizing log data in real time.
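To show what monitoring a model service can look like at the lowest level, here is a rough sketch of a counter rendered in the Prometheus text exposition format (`# HELP`, `# TYPE`, then one sample per label set). The class `SketchCounter` and the metric name are made up for illustration; in practice you would likely use an official client library, and this sketch skips label-value escaping.

```python
from typing import Dict, Tuple

LabelSet = Tuple[Tuple[str, str], ...]


class SketchCounter:
    """A monotonically increasing metric, keyed by label values."""

    def __init__(self, name: str, help_text: str) -> None:
        self.name = name
        self.help_text = help_text
        self._values: Dict[LabelSet, float] = {}

    def inc(self, amount: float = 1.0, **labels: str) -> None:
        """Increase the counter for one label combination."""
        if amount < 0:
            raise ValueError("counters can only increase")
        key = tuple(sorted((k, str(v)) for k, v in labels.items()))
        self._values[key] = self._values.get(key, 0.0) + amount

    def render(self) -> str:
        """Render the counter as text a Prometheus server could scrape."""
        lines = [
            f"# HELP {self.name} {self.help_text}",
            f"# TYPE {self.name} counter",
        ]
        for key, value in sorted(self._values.items()):
            label_text = ",".join(f'{k}="{v}"' for k, v in key)
            selector = f"{{{label_text}}}" if label_text else ""
            lines.append(f"{self.name}{selector} {value}")
        return "\n".join(lines) + "\n"


if __name__ == "__main__":
    predictions = SketchCounter("predictions_total", "Predictions served.")
    predictions.inc(model="churn")
    predictions.inc(model="churn")
    print(predictions.render(), end="")
```

Serving this text from an HTTP endpoint is what lets Prometheus scrape the service and Grafana chart the result.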

8. Distributed Training & Scaling:

Horovod: A distributed deep learning training framework.

Ray: An open-source, distributed computing system that can be used to scale ML workloads.
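Data-parallel training, the pattern Horovod is built around, has each worker compute gradients on its own shard of the batch and then average them across workers (an allreduce) before updating the weights. The sketch below simulates that averaging step in a single process with plain lists; `allreduce_mean` and `sgd_step` are hypothetical names, and no real communication happens.

```python
from typing import List


def allreduce_mean(worker_grads: List[List[float]]) -> List[float]:
    """Average gradients element-wise across all workers."""
    if not worker_grads:
        raise ValueError("need at least one worker")
    n_workers = len(worker_grads)
    return [sum(values) / n_workers for values in zip(*worker_grads)]


def sgd_step(weights: List[float], grad: List[float], lr: float) -> List[float]:
    """Apply one plain SGD update with the averaged gradient."""
    return [w - lr * g for w, g in zip(weights, grad)]


if __name__ == "__main__":
    # Two simulated workers, each holding gradients from its own data shard.
    grads = [[1.0, 2.0], [3.0, 4.0]]
    averaged = allreduce_mean(grads)
    print(sgd_step([1.0, 1.0], averaged, lr=0.5))
```

Because every worker applies the same averaged gradient, all model replicas stay identical after each step.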

9. Cloud Platforms (Optional but beneficial):

Amazon SageMaker: A fully managed AWS service that lets developers and data scientists build, train, and deploy ML models.

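Google AI Platform: A suite of services on Google Cloud Platform for building ML models. Its successor, Vertex AI, is now Google Cloud's unified platform for training and deploying models.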

Azure Machine Learning: A cloud service for building, training, and deploying ML models.

10. Experimentation & Reproducibility:

Weights & Biases: Helps in tracking experiments, visualizing metrics, and sharing findings.

Neptune.ai: A platform for ML experiment tracking and model registry.
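At their core, experiment trackers record the parameters of a run and the metrics it produces so that runs can be compared and reproduced later. Here is a minimal standard-library sketch of that idea; `RunLogger`, `best_metric`, and the JSON Lines layout are assumptions for illustration and do not mirror the Weights & Biases or Neptune.ai APIs.

```python
import json
import uuid
from pathlib import Path
from typing import Any, Dict


class RunLogger:
    """Append parameters and metrics for one training run to a JSONL file."""

    def __init__(self, root: Path, params: Dict[str, Any]) -> None:
        root.mkdir(parents=True, exist_ok=True)
        self.run_id = uuid.uuid4().hex[:8]
        self.path = root / f"run-{self.run_id}.jsonl"
        self._write({"type": "params", "params": params})

    def log_metric(self, name: str, value: float, step: int) -> None:
        self._write({"type": "metric", "name": name, "value": value, "step": step})

    def _write(self, record: Dict[str, Any]) -> None:
        with self.path.open("a") as handle:
            handle.write(json.dumps(record) + "\n")


def best_metric(path: Path, name: str) -> float:
    """Return the lowest logged value of a metric (useful for losses)."""
    values = []
    for line in path.read_text().splitlines():
        record = json.loads(line)
        if record["type"] == "metric" and record["name"] == name:
            values.append(record["value"])
    if not values:
        raise KeyError(f"no metric named {name!r} in {path}")
    return min(values)
```

A hosted tracker adds dashboards, artifact storage, and team sharing, but the record being kept is essentially this one.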

11. Community & Collaboration:

Slack/Teams: For team collaboration.

arXiv: For staying up to date with the latest research papers.

Reddit's r/MachineLearning, Stack Overflow: For community discussions and problem-solving.